MySQL router high availability for InnoDB cluster

How to setup MySQL router with High availability for InnoDB cluster?

MySQL router deployed as part of an Innodb cluster must be able to withstand any failures as it acts as the middle man between application and database cluster. There are two options when installing the routers,

- Install the MySQL routers on application servers, so the routers are a part of application availability it self. MySQL recommends this as the default installation method in the router FAQ section as well. Ref - https://dev.mysql.com/doc/mysql-router/8.0/en/mysql-router-faq.html#faq-mysql-router-how-install

- Install MySQL routers on separate servers.

Option two can be used based on the specific design consideration of a given deployment. As an example there are some deployments where router servers are deployed in separate network segment from databases clusters and application servers. When routers are deployed separately it is a must that they are highly available. No matter running a multi node Innodb cluster which accepts connections via single MySQL router which can be the single point of failure for the whole application.

Making MySQL router deployments highly available

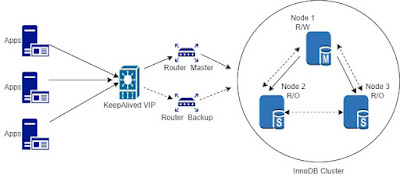

A solution that cater multiple routers to accept connections require all the router servers to be accessible from a single access name or IP from the application. Incomes - Keepalived, which can provide a VIP to operate in front of the router server. With the introduction of Keepalived application connection flow to database is below.

Setting up Keepalived for MySQL router high availability

The example below is based on Innodb Cluster setup discussed in http://dbtechno.blogspot.com/2022/01/mysql-innodb-cluster-in-15-minutes.html. Below are the IP addresses for each of the components.

- Cluster database IPs - 192.168.56.121, 192.168.56.122, 192.168.56.123

- Router 1 (Master) - 192.168.56.100

- Router 2 (Backup) - 192.168.56.201

- Keepalived VIP - 192.168.56.77

vrrp_instance VI_1 {

state MASTER

interface enp0s8

virtual_router_id 50

priority 102

advert_int 1

virtual_ipaddress {

192.168.56.77

}

}vrrp_instance VI_1 {

vrrp_instance VI_2 {

state BACKUP

interface enp0s8

virtual_router_id 51

priority 101

advert_int 1

virtual_ipaddress {

192.168.56.77

}

}track_script can be included in the configuration but not mandatory.

Testing the MySQL router failover

With Keepalived service started on both router servers, router high avilability testing can be done using simple database insert script. Here Python insert script which logs the source hostname with timestamp is used.

Create users, database and privileges for test insert script.

create user testuser@192.168.56.100 identified by 'p@ssword%df31';

create user testuser@192.168.56.201 identified by 'p@ssword%df31';

create database db1;

grant all on db1.* to testuser@192.168.56.201;

grant all on db1.* to testuser@192.168.56.100;

import mysql.connector

import time

#from time import time

from datetime import datetime

tries = 4

while tries > 0:

tries -= 1

try:

connection = mysql.connector.connect(host='192.168.56.77',

port='6446',

database='db1',

user='testuser',

password='p@ssword%df31')

mySql_insert_query = """INSERT INTO timing (timet)

VALUES

(now()) """

for i in range (1, 101):

t1 = time.localtime()

cursor = connection.cursor()

cursor.execute(mySql_insert_query)

connection.commit()

t2 = time.localtime()

print(cursor.rowcount, "Record inserted successfully into timing table",

time.strftime("%I:%M:%S %p", t1),time.strftime("%I:%M:%S %p", t2),

connection.server_host)

time.sleep(1)

cursor.close()

except mysql.connector.Error as error:

if tries == 0:

print("Failed to insert record into timing table {}".format(error))

break

else:

print "Try reconnect.."

connection.reconnect()

finally:

if connection.is_connected():

connection.close()

print("MySQL connection is closed")

Conclusion

As demonstrated above MySQL router can be configure to ensure high availability when deployed separately from application server using VIP provider. Another possible deployment scenario is when application are hosted on containerized environment. In such setup router can also be deployed in containerized setup as Kubernetes pod as well. Planning to provide an example in another post.

Comments

Post a Comment