MySQL InnoDB Cluster in 15 minutes

What is MySQL innodb cluster

MySQL innodb cluster is a high availability solutions provided by MySQL which includes both database service auto failover and Client auto failover. It utilize multiple technologies underneath to ensure its availability targets.- Group Replication -- Ensure distributed consensus, Make sure all member of the cluster agrees same state transaction wise.

- MySQL Shell -- Provide cluster maintenance and monitoring interfaces.

- MySQL Router -- Manges client failovers, provide a single access point for clients.

Setting up innodb cluster

Although a complete solution, quickly setting up cluster at least for testing purposes can be challenging using the normal process. This is mainly because it involves different technologies and the requirement of setting up at least 3 nodes. Hence we can use below Vagrant and Virtual box based solution to setup MySQL innodb cluster rapidly also with the reusability.InnoDB cluster using Vagrant and Virtual box

- Install below Vagrant and Virtual box versions, specific version requirement is a limitation on the current vagrant box used

- VirtualBox - 5.1.38 - https://www.virtualbox.org/wiki/Download_Old_Builds_5_1

- Vagrant - 2.1.0 - https://releases.hashicorp.com/vagrant/2.1.0/

- Create empty folder in Windows, Using Windows cmd terminal execute below commands

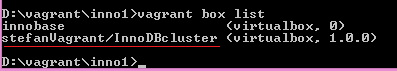

cd **Folder Created** vagrant box add stefanVagrant/InnoDBcluster --insecure vagrant box status

Output should be similar to below, - Download zip file from -- https://github.com/stefanhubb4/innoDBCluster_vagrant

- Deploy the cluster using

vagrant up

This will deploy four Oracle Linux 7.8 virtual machines with below configuration.

db nodes

192.168.56.121 - inno1

192.168.56.122 - inno2

192.168.56.123 - inno3

router

192.168.56.100 - router

You can verify the deployment usingvagrant status, and the output should be similar to below. - Testing the cluster Below credentials can be used to check the status of deployed cluster.

- Testing the client connectiity In order to check client connectivity ssh to mysql router server, bootstrap and start the router using below steps. Once router is started connect to innodb read write or read only ports and verify.

server root password - vagrant

mysql root password - G0w@y#3031!

mysql clususer password - G0w@y#4041!

MySQL JS > shell.connect('clususer@inno1');

Creating a session to 'clususer@inno1'

Please provide the password for 'clususer@inno1': ***********

Save password for 'clususer@inno1'? [Y]es/[N]o/Ne[v]er (default No):

Fetching schema names for autocompletion... Press ^C to stop.

Your MySQL connection id is 33

Server version: 5.7.35-log MySQL Community Server (GPL)

No default schema selected; type \use <schema> to set one.

<ClassicSession:clususer@inno1:3306>

MySQL inno1:3306 ssl JS > var c = dba.getCluster('testcluster');

MySQL inno1:3306 ssl JS > c.status()

{

"clusterName": "testCluster",

"defaultReplicaSet": {

"name": "default",

"primary": "inno1:3306",

"ssl": "REQUIRED",

"status": "OK",

"statusText": "Cluster is ONLINE and can tolerate up to ONE failure.",

"topology": {

"inno1:3306": {

"address": "inno1:3306",

"memberRole": "PRIMARY",

"mode": "R/W",

"readReplicas": {},

"role": "HA",

"status": "ONLINE",

"version": "5.7.35"

},

"inno2:3306": {

"address": "inno2:3306",

"memberRole": "SECONDARY",

"mode": "R/O",

"readReplicas": {},

"role": "HA",

"status": "ONLINE",

"version": "5.7.35"

},

"inno3:3306": {

"address": "inno3:3306",

"memberRole": "SECONDARY",

"mode": "R/O",

"readReplicas": {},

"role": "HA",

"status": "ONLINE",

"version": "5.7.35"

}

},

"topologyMode": "Single-Primary"

},

"groupInformationSourceMember": "inno1:3306"

}

MySQL inno1:3306 ssl JS > \quit

As per the output 3 node MySQL innodb cluster with single primary is deployed.

[root@router ~]# sudo mysqlrouter --bootstrap clususer@inno1:3306 --user=mysqlrouter

Please enter MySQL password for clususer:

# Bootstrapping system MySQL Router instance...

- Creating account(s) (only those that are needed, if any)

- Verifying account (using it to run SQL queries that would be run by Router)

- Storing account in keyring

- Adjusting permissions of generated files

- Creating configuration /etc/mysqlrouter/mysqlrouter.conf

Existing configuration backed up to '/etc/mysqlrouter/mysqlrouter.conf.bak'

# MySQL Router configured for the InnoDB Cluster 'testCluster'

After this MySQL Router has been started with the generated configuration

$ /etc/init.d/mysqlrouter restart

or

$ systemctl start mysqlrouter

or

$ mysqlrouter -c /etc/mysqlrouter/mysqlrouter.conf

InnoDB Cluster 'testCluster' can be reached by connecting to:

## MySQL Classic protocol

- Read/Write Connections: localhost:6446

- Read/Only Connections: localhost:6447

## MySQL X protocol

- Read/Write Connections: localhost:6448

- Read/Only Connections: localhost:6449

[root@router ~]# mysqlrouter &

[1] 4811

[root@router ~]# logging facility initialized, switching logging to loggers specified in configuration

[root@router ~]#

[root@router ~]# mysql --user=clususer --password --host=localhost --port=6446 --protocol=tcp

Enter password:

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 137

Server version: 5.7.35-log MySQL Community Server (GPL)

Copyright (c) 2000, 2021, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql>

Conclusion

As demonstrated above using Vagrant and Virtualbox reduce the deployment time for MySQL innodb test cluster drastically.Also this allows easy failover testing as cluster can be reinitiated to original status by just using

vagrant destroy and vagrant up.

Great collection and thanks for sharing this info with us.

ReplyDeleteMysql DBA Course

Impressive Article . Thanks for sharing the information.

ReplyDeleteSnowflake Online Training Course

Snowflake Training

Snowflake Online Training

Snowflake Online Course Hyderabad

Snowflake Training in Hyderabad

SnowFlake Online Certification Training

Snowflake Training in Ameerpet

Snowflake Online Training in India

Snowflake Training Insititue in Hyderabad